
Abstract:

Previous research has shown that no single classifier modeling approach is suitable for all gesture recognition tasks. This research therefore investigated which combination of sensor devices and algorithms, configured with different parameters, yields the highest Arabic Sign Language (ArSL) recognition accuracy in a gesture recognition system.

This research proposed eight gesture recognition models. Seven are for static gesture recognition (Static Proposed Models, SPM) and use two depth sensors, Microsoft's Kinect and the Leap Motion Controller (LMC); the eighth is for dynamic gesture recognition (Dynamic Proposed Model, DPM) and uses only the Kinect.

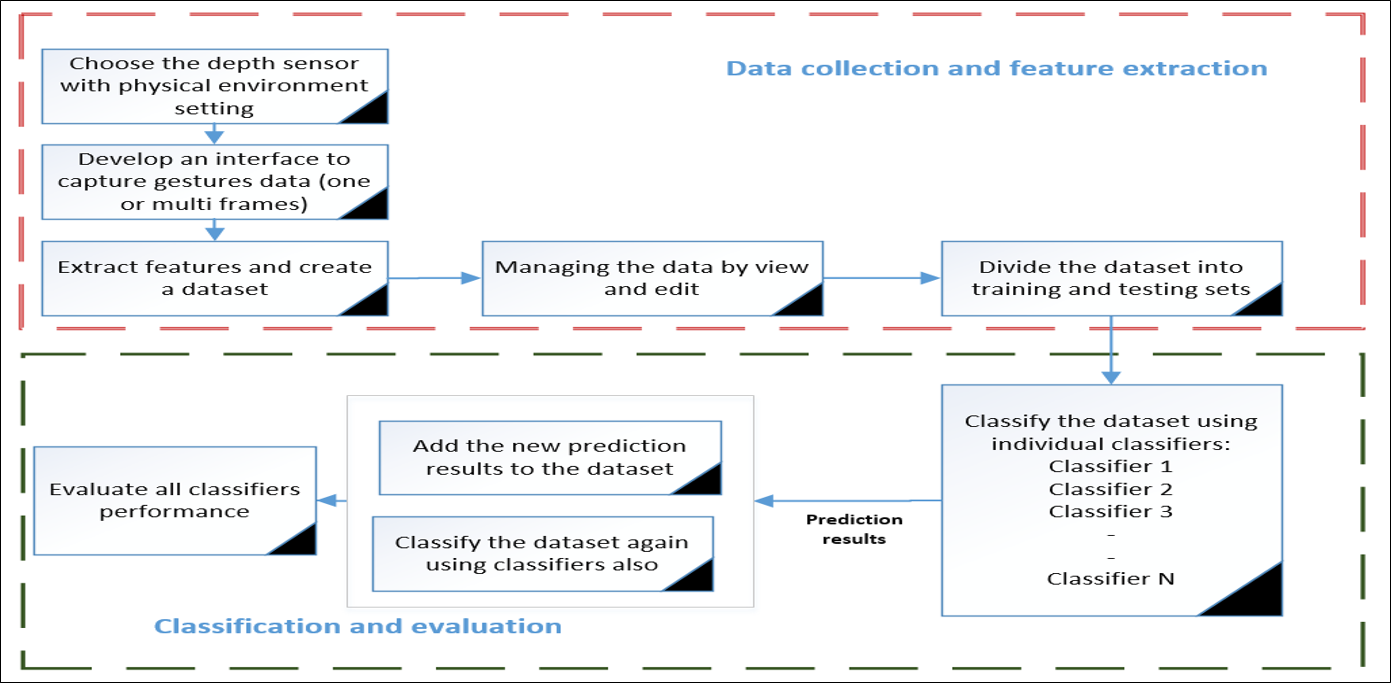

All eight models were developed using the same process: 1) selecting the sensors used to capture all upper-body skeleton joints, 2) creating a prototype interface to collect the dataset, 3) having participants gesture the 28 ArSL letters, 5 static words, and 6 dynamic words, 4) extracting features from the dataset, 5) classifying the observations with supervised machine learning, which divides the dataset into training and testing sets to train and assess the models, and 6) evaluating the performance of the different classifier algorithms with three metrics (accuracy, AUC, and log loss).
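Steps 5 and 6 above can be illustrated with a minimal pure-Python sketch of a train/test split and the three evaluation metrics. This is not the authors' tooling: the 70/30 split ratio, the fixed seed, and the one-vs-rest treatment of AUC are assumptions chosen for illustration, and `probs` is assumed to map each class label to a predicted probability.

```python
import math
import random


def train_test_split(rows, test_fraction=0.3, seed=0):
    """Shuffle a copy of the observations and split them into training and testing sets.

    The 0.3 test fraction and seed are illustrative assumptions, not the study's settings.
    """
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = int(round(len(rows) * test_fraction))
    return rows[n_test:], rows[:n_test]


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def log_loss(y_true, probs, eps=1e-15):
    """Mean multiclass log loss; probs[i] is a dict mapping class label -> probability."""
    total = 0.0
    for label, p in zip(y_true, probs):
        # Clip so a zero probability for the true class does not produce log(0).
        q = min(max(p.get(label, 0.0), eps), 1 - eps)
        total -= math.log(q)
    return total / len(y_true)


def binary_auc(y_true, scores):
    """One-vs-rest ROC AUC: chance a positive outscores a negative (ties count as 1/2)."""
    pos = [s for y, s in zip(y_true, scores) if y]
    neg = [s for y, s in zip(y_true, scores) if not y]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

For example, `accuracy(["a", "b", "c", "a"], ["a", "b", "a", "a"])` gives `0.75`. A multiclass AUC can be obtained by computing `binary_auc` once per class (that class as positive, all others as negative) and averaging.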

The proposed models used different algorithms. SPM1, SPM2, and SPM3 each used a single algorithm (Hausdorff distance, Similarity, and SVM, respectively), while SPM4, SPM5, SPM6, SPM7, and DPM used eleven predictive categories of three algorithms: Support Vector Machine (SVM), Random Forest (RF), and k-Nearest Neighbors (KNN).
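Two of the named techniques can be sketched in a few lines of pure Python. The first part computes the symmetric Hausdorff distance between two sets of 3D joint positions and labels a sample by its nearest class template; matching against one template per class is an assumption about how a Hausdorff-based model such as SPM1 might work, not a description of the authors' implementation. The second part is a plain KNN majority vote over feature vectors. SVM and RF are omitted, and the "eleven predictive categories" are not reproduced.

```python
import math
from collections import Counter


def directed_hausdorff(A, B):
    """Largest distance from any point in A to its nearest point in B."""
    return max(min(math.dist(a, b) for b in B) for a in A)


def hausdorff(A, B):
    """Symmetric Hausdorff distance between two point sets."""
    return max(directed_hausdorff(A, B), directed_hausdorff(B, A))


def classify_by_template(sample, templates):
    """Return the label whose template point set is closest to `sample`.

    `templates` (hypothetical) maps a class label to a list of (x, y, z) joint positions.
    """
    return min(templates, key=lambda label: hausdorff(sample, templates[label]))


def knn_predict(train, query, k=3):
    """k-nearest-neighbours majority vote over (feature_vector, label) pairs."""
    nearest = sorted(train, key=lambda row: math.dist(row[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]
```

Hausdorff distance needs no training and compares whole point sets, whereas KNN compares fixed-length feature vectors, so the two expect differently shaped inputs.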

Methodology:

This figure shows the data collection and feature extraction process.
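Feature extraction from skeleton data can be sketched as follows, under loudly stated assumptions: each captured frame is taken to be a dict of hypothetical joint names mapped to (x, y, z) positions, features are joint positions relative to a reference joint divided by shoulder width, and dynamic gestures are resampled to a fixed number of frames. None of these choices is confirmed as the study's actual feature set.

```python
import math

# Hypothetical joint names; real Kinect or LMC frames use their own identifiers.
JOINTS = ["head", "shoulder_left", "shoulder_right", "elbow_left", "elbow_right",
          "hand_left", "hand_right"]


def extract_features(frame, reference="spine_shoulder"):
    """Turn one {joint: (x, y, z)} frame into a translation- and scale-normalised vector.

    Each joint is expressed relative to the reference joint and divided by shoulder
    width, so a gesture made at a different distance from the sensor gives similar values.
    """
    ox, oy, oz = frame[reference]
    scale = math.dist(frame["shoulder_left"], frame["shoulder_right"]) or 1.0
    features = []
    for name in JOINTS:
        x, y, z = frame[name]
        features.extend(((x - ox) / scale, (y - oy) / scale, (z - oz) / scale))
    return features


def sequence_features(frames, n_samples=10):
    """Pick n_samples evenly spaced frames from a dynamic gesture and concatenate them."""
    step = (len(frames) - 1) / max(n_samples - 1, 1)
    picked = [frames[round(i * step)] for i in range(n_samples)]
    return [value for frame in picked for value in extract_features(frame)]
```

Resampling gives every dynamic gesture the same vector length regardless of how many frames were recorded, which fixed-length classifiers such as SVM, RF, and KNN require.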
